Fear and Loathing in Comfy UI: A Savage Guide for Beginners

A desperate, sweat-soaked dive into the node-based madness of Comfy UI, the raw, chaotic, high-voltage heart of Stable Diffusion image generation. Not for the faint of heart or weak of stomach.

Published by slopnation • April 27, 2025

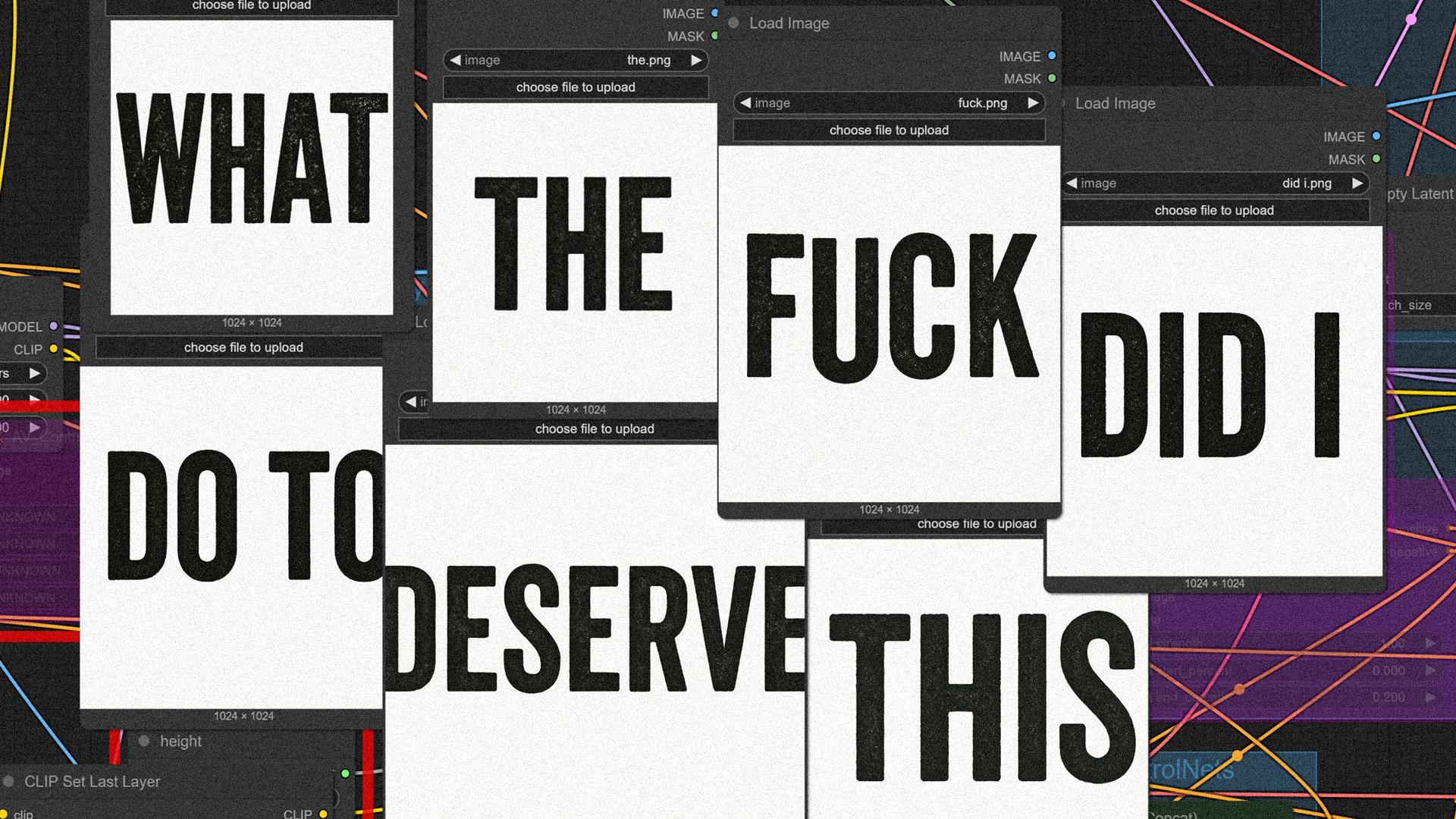

Alright, buckle up, you poor doomed bastard. Forget the slick, user-friendly poison they peddle on the main drag – the interfaces designed by grinning corporate eunuchs to hide the awful, snarling truth beneath the hood. We’re talking about Comfy UI now, a different species of beast altogether. This isn’t some gentle introduction; this is a headfirst plunge into the Guts of the Machine, a wiring diagram straight from a terminal ward nightmare. You wanted to see the engine? Fine. But don’t come crying to me when the gears grind your sanity to a fine pink mist under this cold Swiss moon. It’s nearly 11 PM on a Sunday night, the respectable burghers are asleep, and we’re staring into the howling digital void… perfect.

They call it a “node-based graphical user interface” for Stable Diffusion. Translation: it’s a goddamn mess of boxes and wires, a psychedelic roadmap of the image generation process that looks less like software and more like the frantic scribblings on a lunatic asylum wall. Forget sliders and simple buttons. This is raw exposure. This is mainlining the machine logic.

The Twisted Guts: Nodes, Wires, and the Howling Void

First, you gotta wrap your fried brain around the Nodes. These are the rectangular boxes, the pulsating organs of this infernal machine. Each one performs some specific, often arcane, function. One loads the heavy-duty hallucinogen (the Load Checkpoint node, containing the main AI model), another tries to decipher your fevered textual demands (the CLIPTextEncode nodes for prompts), one actually does the brutal, high-speed work of churning noise into something (the KSampler), another translates the raw psychic static into a visible image (VAE Decode), and yet another saves the fleeting evidence (Save Image). Hundreds of these bastards exist, each a tiny cog in a potentially massive engine of digital chaos.

Then there are the Wires (or “edges,” if you’re feeling formal, which you shouldn’t be). These spidery connections link the nodes, carrying the data—the juice—from one box’s output to another’s input. Output on the right, input on the left. You connect them, dragging lines like a crazed surgeon stitching together monstrous creations. It’s a visual representation of the data flow, meaning you see exactly how the signal—or the madness—propagates through your setup. There’s a terrible beauty in it, like watching a train wreck in slow motion. It’s transparent, alright. You see everything, especially the failures.
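The wiring rules can be sketched as a toy model in Python. Everything here is hypothetical (the `Node` class, `connect`, the type strings), loosely modeled on how ComfyUI behaves. Each socket carries a data type such as MODEL, CLIP, CONDITIONING, LATENT, VAE or IMAGE. An output can fan out to many inputs, but each input takes exactly one wire, and the editor refuses to join sockets of different types.

```python
from __future__ import annotations

from dataclasses import dataclass, field


# Hypothetical toy model (not ComfyUI's classes): sockets are typed by name strings.
@dataclass
class Node:
    name: str
    inputs: dict[str, str]   # input socket name -> type; drawn on the left edge
    outputs: list[str]       # output socket types by slot index; drawn on the right edge
    links: dict[str, tuple[Node, int]] = field(default_factory=dict)  # input -> (source, slot)


def connect(src: Node, out_slot: int, dst: Node, in_name: str) -> None:
    """Wire src's output slot into dst's named input, refusing mismatched types."""
    out_type = src.outputs[out_slot]
    in_type = dst.inputs[in_name]
    if out_type != in_type:
        raise TypeError(
            f"cannot wire {src.name} ({out_type}) into {dst.name}.{in_name} ({in_type})"
        )
    # An input holds exactly one wire, so reconnecting replaces the old one;
    # an output may feed any number of inputs.
    dst.links[in_name] = (src, out_slot)


if __name__ == "__main__":
    ckpt = Node("Load Checkpoint", inputs={}, outputs=["MODEL", "CLIP", "VAE"])
    positive = Node("CLIPTextEncode", inputs={"clip": "CLIP"}, outputs=["CONDITIONING"])
    negative = Node("CLIPTextEncode", inputs={"clip": "CLIP"}, outputs=["CONDITIONING"])
    connect(ckpt, 1, positive, "clip")  # CLIP -> CLIP: accepted
    connect(ckpt, 1, negative, "clip")  # same output, second wire: also fine
    try:
        connect(ckpt, 2, positive, "clip")  # VAE -> CLIP: refused
    except TypeError as err:
        print(err)  # cannot wire Load Checkpoint (VAE) into CLIPTextEncode.clip (CLIP)
```

The type check is the whole point: it is why a wire dragged from the wrong socket simply snaps back instead of linking.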

The Basic Ritual: Conjuring Visions from the Ether (Text-to-Image)

Alright, let’s map out the simplest path through this jungle, the basic text-to-image ritual. Don’t expect comfort, just function… maybe.

- Load Checkpoint: First, you gotta slam the main drug into the machine. This node points to the massive model file (`.safetensors`, usually) – the brain, the core programming, the heavy chemical base that dictates the trip’s flavor.

- CLIPTextEncode (Positive Prompt): Now you scream your desires into the abyss. Type what you want to see in this box. Be specific, but remember you’re talking to a machine that thinks in alien logic.

- CLIPTextEncode (Negative Prompt): And here, you list the horrors, the creeping dreads, the visual garbage you want to keep out of the final vision. Ward off the digital demons. These two nodes translate your pathetic human words into “conditioning,” fuel for the next stage.

- KSampler: The savage heart of the journey. This node takes the Model, the Positive and Negative conditioning, and a blank latent canvas (usually from an `EmptyLatentImage` node you have to add, which sets the width, height, and batch size), then floods that canvas with pure random noise generated from its `seed`. Then it starts the frantic dance, iterating step by agonizing step, trying to bash that noise into the shape of your prompt. It’s guided by settings like `steps` (how many refinement loops), `cfg` (how much it really listens to you), `sampler_name` (the specific algorithm for noise-wrangling, like `euler` or `dpmpp_2m` – pick your poison), and `scheduler` (how the noise level drops from one step to the next; `dpmpp_2m` plus `karras` is the “DPM++ 2M Karras” combo you’ll hear name-dropped in the forums). It’s a high-wire act over a pit of digital crocodiles.

- VAE Decode: What comes out of the KSampler is raw “latent” data – unformed potential, psychic static. The VAE Decode node acts like a filter, a translator, taking that incomprehensible mess and forcing it into the shape of a picture your feeble human eyes can understand. It needs a VAE model to do this, often included in the Checkpoint, but sometimes you wire in a separate one hoping for less visual distortion.

- Save Image: If you survived, if the process didn’t crash and burn, this node grabs the final image from the VAE Decode and pins it down, saving it to your hard drive. Proof. Evidence. Something to clutch onto when the flashbacks start.
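Those KSampler knobs are easier to grasp with numbers in hand. Here is a minimal sketch in plain Python, assuming an SD 1.x/SDXL-style model whose latent has 4 channels and is 8 times smaller than the image on each side. The helper names are hypothetical, not ComfyUI’s. The sketch shows three things: the blank latent an `EmptyLatentImage` node hands over, why the same `seed` reproduces the same noise, and the classifier-free guidance blend that `cfg` controls.

```python
from __future__ import annotations

import random


# Hypothetical helpers illustrating the KSampler's inputs; not ComfyUI's source.

def latent_shape(width: int, height: int, batch_size: int = 1) -> tuple[int, int, int, int]:
    """Blank latent for an SD 1.x/SDXL-style model: 4 channels, each side shrunk 8x."""
    if width % 8 or height % 8:
        raise ValueError("width and height should be multiples of 8")
    return (batch_size, 4, height // 8, width // 8)


def seeded_noise(count: int, seed: int) -> list[float]:
    """Gaussian noise that comes out identical every time for the same seed.
    (ComfyUI draws its noise with PyTorch; the stdlib stands in here.)"""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(count)]


def apply_cfg(cond: list[float], uncond: list[float], cfg: float) -> list[float]:
    """Classifier-free guidance, applied element-wise at each step:
    uncond + cfg * (cond - uncond). 'cond' is the model's prediction with the
    positive prompt, 'uncond' the prediction with the negative prompt."""
    return [u + cfg * (c - u) for c, u in zip(cond, uncond)]


if __name__ == "__main__":
    print(latent_shape(512, 512))                                # (1, 4, 64, 64)
    print(seeded_noise(3, seed=42) == seeded_noise(3, seed=42))  # True
    print(apply_cfg([2.0, 4.0], [1.0, 1.0], 1.0))  # [2.0, 4.0]: positive prediction as-is
    print(apply_cfg([2.0, 4.0], [1.0, 1.0], 7.0))  # [8.0, 22.0]: shoved hard away from the negative
```

Crank `cfg` and the gap between “what you asked for” and “what you warned against” gets multiplied on every step, which is why sky-high values tend to fry the image.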

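Connecting them? Outputs to inputs. Model to Sampler, CLIP to both Text Encoders, Text Encoders to the Sampler’s positive/negative inputs, Empty Latent Image to the Sampler’s latent input, Sampler’s latent output to VAE Decode (along with the Checkpoint’s VAE output, unless you wired in a separate VAE), VAE Decode’s image output to Save Image. It’s a goddamn cat’s cradle designed by a schizophrenic.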
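ComfyUI can export a graph as “API format” JSON: node id mapped to a `class_type` plus its `inputs`, with each wire written as `[source_node_id, output_index]`. The sketch below writes the basic ritual in that shape as a Python dict and derives a run order from the wires alone, using the standard library’s `graphlib`. It is an illustration, not ComfyUI’s executor (which also caches results and only re-runs what changed). The node ids, model file name, prompts, and the `execution_order` helper are placeholders.

```python
from __future__ import annotations

from graphlib import TopologicalSorter

# Basic text-to-image graph, approximately in ComfyUI's API format.
# CheckpointLoaderSimple outputs: 0 = MODEL, 1 = CLIP, 2 = VAE.
WORKFLOW = {
    "4": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "your_model.safetensors"}},  # placeholder file name
    "6": {"class_type": "CLIPTextEncode",   # positive prompt
          "inputs": {"text": "a desert highway at dusk, bats overhead", "clip": ["4", 1]}},
    "7": {"class_type": "CLIPTextEncode",   # negative prompt
          "inputs": {"text": "blurry, watermark", "clip": ["4", 1]}},
    "5": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 512, "height": 512, "batch_size": 1}},
    "3": {"class_type": "KSampler",
          "inputs": {"model": ["4", 0], "positive": ["6", 0], "negative": ["7", 0],
                     "latent_image": ["5", 0], "seed": 42, "steps": 20, "cfg": 7.0,
                     "sampler_name": "euler", "scheduler": "normal", "denoise": 1.0}},
    "8": {"class_type": "VAEDecode",
          "inputs": {"samples": ["3", 0], "vae": ["4", 2]}},
    "9": {"class_type": "SaveImage",
          "inputs": {"images": ["8", 0], "filename_prefix": "bat_country"}},
}


def is_link(value) -> bool:
    """A wire looks like [node_id, output_index]; anything else is a widget value."""
    return (isinstance(value, list) and len(value) == 2
            and isinstance(value[0], str) and isinstance(value[1], int))


def execution_order(workflow: dict) -> list[str]:
    """Order node ids so every node comes after everything wired into it."""
    deps = {
        node_id: {v[0] for v in node["inputs"].values() if is_link(v)}
        for node_id, node in workflow.items()
    }
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    for node_id in execution_order(WORKFLOW):
        print(node_id, WORKFLOW[node_id]["class_type"])
```

Run it and the loader and the empty latent surface before anything that consumes them, while Save Image comes out dead last, since every other node feeds into it.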

Know Your Poisons: A Quick Look at the Key Nodes

- Checkpoint: The main stash. Heavy stuff. Dictates the overall style and capability.

- CLIP: The translator. Tries to bridge the gap between your words and the machine’s alien thoughts. Often fails.

- VAE: The image filter. Turns latent nightmares into pixels. Can introduce its own weirdness.

- KSampler: The engine of chaos. Where the noise gets wrestled into submission… or escapes containment.

The Ordeal: Wrestling the Beast into Submission (Installation)

Getting this monster onto your machine is its own special kind of hell-trip. Forget simple installers. Usually, you’re grabbing strange code artifacts from the digital black market (GitHub: github.com/comfyanonymous/ComfyUI).

- The “Easy” Path (Lies): Maybe you find a “portable” version for Windows. Download a massive `.7z` file, wrestle with 7-Zip like you’re cracking a safe, pray the included `.bat` file actually runs without unleashing digital locusts.

- The Highway of Pain (Manual): More likely, you’re installing Python (get the right version, or suffer!), installing Git (a tool for code sorcerers), cloning repositories with arcane `git clone` commands, then battling the command line, feeding it `pip install -r requirements.txt` like offerings to a vengeful god. You’ll need PyTorch too, ideally installed before that requirements file and specifically compiled for your GPU’s dark religion (Nvidia CUDA? AMD ROCm? Apple Silicon voodoo?). Expect errors. Expect despair.

- Feeding the Beast: Remember, Comfy UI itself is just the engine block. You still need the fuel – the Checkpoint models, maybe custom VAEs, LoRAs, embeddings. Download these gigabyte-sized chunks of data and shove them into the correct subdirectories (`models/checkpoints`, `models/vae`, etc.).

- The Manager Maybe: Some setups include, or you can add, the ComfyUI Manager. A custom node itself, a frantic attempt to impose order by letting you install other custom nodes and models from within the UI. A necessary evil, perhaps.
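Before the first launch, a quick preflight can spare you a round of cryptic tracebacks. This is a minimal sketch. It assumes a stock checkout whose `models/` folder holds the `checkpoints` and `vae` subdirectories (plus `loras` and `embeddings`) and that your weights are `.safetensors` files. `detect_backend` and `check_models` are hypothetical helpers, not part of ComfyUI. The script reports which PyTorch backend answers the call (CUDA, ROCm, Apple’s MPS, or plain CPU) and which model folders are empty or missing.

```python
from __future__ import annotations

import sys
from pathlib import Path

# A partial list of the subfolders a stock ComfyUI checkout keeps under models/.
MODEL_DIRS = ("checkpoints", "vae", "loras", "embeddings")


def detect_backend() -> str:
    """Report which PyTorch backend is usable, if torch is installed at all."""
    try:
        import torch
    except ImportError:
        return "no torch"
    if torch.cuda.is_available():
        # ROCm builds of PyTorch reuse the torch.cuda API; torch.version.hip tells them apart.
        return "rocm" if getattr(torch.version, "hip", None) else "cuda"
    mps = getattr(torch.backends, "mps", None)  # Apple Silicon backend
    if mps is not None and mps.is_available():
        return "mps"
    return "cpu"


def check_models(comfy_root: str | Path) -> dict[str, int]:
    """Count .safetensors files in each expected models/ subfolder (-1 = folder missing)."""
    root = Path(comfy_root) / "models"
    counts = {}
    for name in MODEL_DIRS:
        folder = root / name
        counts[name] = len(list(folder.glob("*.safetensors"))) if folder.is_dir() else -1
    return counts


if __name__ == "__main__":
    # Usage: python preflight.py path/to/ComfyUI
    root = sys.argv[1] if len(sys.argv) > 1 else "ComfyUI"
    print("python", sys.version.split()[0], "| backend:", detect_backend())
    for name, count in check_models(root).items():
        status = "MISSING" if count < 0 else f"{count} .safetensors file(s)"
        print(f"models/{name}: {status}")
```

A backend of `cpu` on a machine with a big GPU usually means the wrong PyTorch build got installed, and an empty `checkpoints` folder means the Load Checkpoint node will have nothing to offer you.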

Prepare for pain. Prepare for cryptic error messages spat out by the command line like curses. This is not plug-and-play; this is wrestling with raw, unstable technology.

Screaming Into the Ether: When the Bats Come (Troubleshooting)

It will break. Nodes will turn red, screaming errors. Connections will refuse to link. Images will emerge as unholy abominations. Where do you turn in this digital maelstrom?

- GitHub Issues: A graveyard of broken dreams and unsolved problems. You might find someone else who suffered your specific torment, but rarely a clean solution.

- Tutorials & Guides: Scattered across the web like fragments of a lost map. Some written by lunatics, some by saints, most barely coherent. Consume with caution. (Check stable-diffusion-art.com, comfyui-wiki.com, maybe YouTube if you can stand the voices.)

- Discord/Forums: Digital asylums where other inmates gibber about workflows and share paranoid theories. Sometimes a useful shard of information glints through the madness.

Don’t expect a friendly helpdesk. You opted for the raw wiring; you get to trace the shorts yourself. You’re alone with the machine and its terrible potential for failure.
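One common flavor of red-node misery is a wire pointing at nothing: a deleted node, or an output slot that doesn’t exist. If you export a workflow as ComfyUI’s “API format” JSON (node id mapped to a `class_type` and `inputs`, wires written as `[source_node_id, output_index]`), a few lines of Python can hunt for dangling wires before you feed it to the machine. This is a sketch only. `find_broken_wires` is a hypothetical helper, you supply the output-slot counts yourself (a Load Checkpoint node has three: MODEL, CLIP, VAE), and ComfyUI’s own validation checks far more than this.

```python
from __future__ import annotations


def find_broken_wires(workflow: dict, output_counts: dict[str, int]) -> list[str]:
    """List inputs wired to a missing node or to an output slot the source lacks.

    output_counts maps class_type -> number of output slots (supplied by you);
    sources whose class_type isn't listed are only checked for existence.
    """
    problems = []
    for node_id, node in workflow.items():
        for name, value in node.get("inputs", {}).items():
            if not (isinstance(value, list) and len(value) == 2):
                continue  # a plain widget value such as a prompt string or a seed
            src_id, slot = value
            src = workflow.get(src_id)
            if src is None:
                problems.append(f"node {node_id}.{name}: source node {src_id} does not exist")
                continue
            n_out = output_counts.get(src["class_type"])
            if n_out is not None and not 0 <= slot < n_out:
                problems.append(f"node {node_id}.{name}: {src['class_type']} has no output {slot}")
    return problems


if __name__ == "__main__":
    broken = {
        "4": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": "your_model.safetensors"}},
        "8": {"class_type": "VAEDecode",
              "inputs": {"samples": ["3", 0], "vae": ["4", 3]}},  # sampler deleted, bad slot
    }
    for line in find_broken_wires(broken, {"CheckpointLoaderSimple": 3}):
        print(line)
    # node 8.samples: source node 3 does not exist
    # node 8.vae: CheckpointLoaderSimple has no output 3
```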

Final Transmission: Buy the Ticket, Take the Ride… Good Luck

So that’s Comfy UI. It’s not comfortable. It’s raw, naked, nerve-shredding exposure to the chaotic heart of AI image generation. It rips away the comforting lies of simpler UIs and forces you to stare into the tangled, node-based guts of the process.

Why endure this madness? Control. Raw, absolute, terrifying control over nearly every variable. Flexibility. Build workflows as simple or as monstrously complex as your deranged mind can conceive. Transparency. You see exactly what connects to what, where the signal flows, where the bottlenecks choke, where the errors bloom.

It’s not for the sane. It’s for the freaks, the experimenters, the control freaks, the ones who need to understand the wiring, even if it electrocutes them. It’s harder, yes. It’s intimidating, absolutely. It will chew you up and spit you out if you’re not careful.

But it’s real. It’s the unvarnished truth of how these images get conjured from noise and prompts. If you’re ready for that kind of intensity… well, buy the ticket. Take the ride. But just remember… we can’t stop here. This is bat country. Good luck, you poor bastard. You’ll need it.